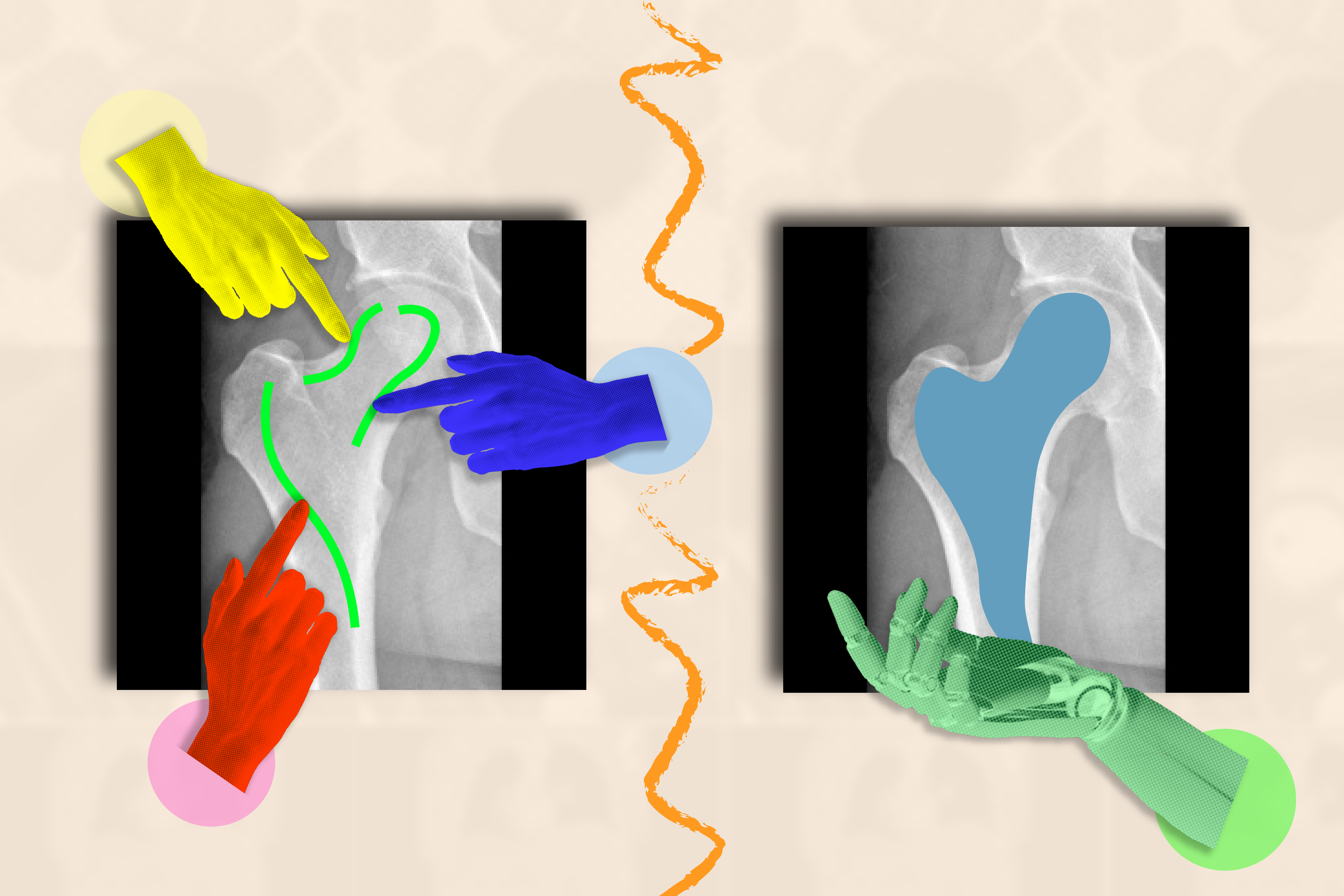

To the untrained eye, a medical image like an MRI or X-ray appears to be a murky collection of black-and-white blobs. It can be a struggle to decipher where one structure (like a tumor) ends and another begins.

When trained to understand the boundaries of biological structures, AI systems can segment (or delineate) regions of interest that doctors and biomedical workers want to monitor for diseases and other abnormalities. Instead of losing precious time tracing anatomy by hand across many images, an artificial assistant could do that for them.

The catch? Researchers and clinicians must label countless images to train their AI system before it can accurately segment. For example, you’d need to annotate the cerebral cortex in numerous MRI scans to train a supervised model to understand how the cortex’s shape can vary in different brains.

Sidestepping such tedious data collection, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Massachusetts General Hospital (MGH), and Harvard Medical School have developed the interactive “ScribblePrompt” framework: a flexible tool that can help rapidly segment any medical image, even types it hasn’t seen before.

Instead of having humans mark up each picture manually, the team simulated how users would annotate over 50,000 scans, including MRIs, ultrasounds, and photographs, across structures in the eyes, cells, brains, bones, skin, and more. To label all those scans, the team used algorithms to simulate how humans would scribble and click on different regions in medical images. In addition to commonly labeled regions, the team also used superpixel algorithms, which find parts of the image with similar values, to identify potential new regions of interest to medical researchers and train ScribblePrompt to segment them. This synthetic data prepared ScribblePrompt to handle real-world segmentation requests from users.
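To make the idea of simulated annotations concrete, here is a minimal sketch of how a click and a scribble might be generated from an existing label mask. It is an illustration only, not the team's code: the function names are hypothetical, and the random walk is an assumed stand-in for whatever stroke model the researchers actually used.

```python
import random

def foreground_pixels(mask):
    """Return (row, col) for every pixel labeled 1 (the structure of interest)."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v == 1]

def simulate_click(mask, rng):
    """Simulated click: one random pixel inside the labeled structure."""
    return rng.choice(foreground_pixels(mask))

def simulate_scribble(mask, rng, max_steps=20):
    """Simulated scribble (assumption: a random walk that stays inside the structure)."""
    pixels = foreground_pixels(mask)
    inside = set(pixels)
    path = [rng.choice(pixels)]
    for _ in range(max_steps):
        r, c = path[-1]
        # Only step to 4-connected neighbors that are still inside the structure.
        neighbors = [
            (r + dr, c + dc)
            for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0))
            if (r + dr, c + dc) in inside
        ]
        if not neighbors:
            break
        path.append(rng.choice(neighbors))
    return path
```

Because every simulated stroke is drawn from a mask that already exists, a sketch like this can produce many different prompts for the same scan without anyone tracing it again.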

“AI has significant potential in analyzing images and other high-dimensional data to help humans do things more productively,” says MIT PhD student Hallee Wong SM ’22, the lead author on a new paper about ScribblePrompt and a CSAIL affiliate. “We want to augment, not replace, the efforts of medical workers through an interactive system. ScribblePrompt is a simple model with the efficiency to help doctors focus on the more interesting parts of their analysis. It’s faster and more accurate than comparable interactive segmentation methods, reducing annotation time by 28 percent compared to Meta’s Segment Anything Model (SAM) framework, for instance.”

ScribblePrompt’s interface is simple: Users can scribble across the rough area they’d like segmented, or click on it, and the tool will highlight the entire structure or background as requested. For example, you can click on individual veins within a retinal (eye) scan. ScribblePrompt can also mark up a structure given a bounding box.

Then, the tool can make corrections based on the user’s feedback. If you wanted to highlight a kidney in an ultrasound, you could use a bounding box, and then scribble in additional parts of the structure if ScribblePrompt missed any edges. If you wanted to edit your segment, you could use a “negative scribble” to exclude certain regions.
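The correction step can be pictured with a small sketch that compares a predicted mask with the intended one. This is an illustrative assumption rather than the published method, and `correction_regions` is a hypothetical helper: pixels the prediction missed are where a user would add a positive scribble, and pixels it wrongly included are where a negative scribble would go.

```python
def correction_regions(predicted, target):
    """Split the disagreement between two binary masks into two pixel lists.

    missed: in the target but not predicted (candidates for a positive scribble)
    extra:  predicted but not in the target (candidates for a negative scribble)
    """
    missed, extra = [], []
    for r, (p_row, t_row) in enumerate(zip(predicted, target)):
        for c, (p, t) in enumerate(zip(p_row, t_row)):
            if t == 1 and p == 0:
                missed.append((r, c))
            elif t == 0 and p == 1:
                extra.append((r, c))
    return missed, extra
```

In the kidney example, an edge the first pass left out would show up in `missed`, while any neighboring tissue that was highlighted by mistake would show up in `extra`.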

These self-correcting, interactive capabilities made ScribblePrompt the preferred tool among neuroimaging researchers at MGH in a user study. 93.8 percent of these users favored the MIT approach over the SAM baseline in improving its segments in response to scribble corrections. As for click-based edits, 87.5 percent of the medical researchers preferred ScribblePrompt.

ScribblePrompt was trained on simulated scribbles and clicks on 54,000 images across 65 datasets, featuring scans of the eyes, thorax, spine, cells, skin, abdominal muscles, neck, brain, bones, teeth, and lesions. The model familiarized itself with 16 types of medical images, including microscopies, CT scans, X-rays, MRIs, ultrasounds, and photographs.

“Many existing methods don’t respond well when users scribble across images because it’s hard to simulate such interactions in training. For ScribblePrompt, we were able to force our model to pay attention to different inputs using our synthetic segmentation tasks,” says Wong. “We wanted to train what’s essentially a foundation model on a lot of diverse data so it would generalize to new types of images and tasks.”

After taking in so much data, the team evaluated ScribblePrompt across 12 new datasets. Although it hadn’t seen these images before, it outperformed four existing methods by segmenting more efficiently and giving more accurate predictions about the exact regions users wanted highlighted.

“Segmentation is the most prevalent biomedical image analysis task, performed widely both in routine clinical practice and in research, which leads to it being both very diverse and a crucial, impactful step,” says senior author Adrian Dalca SM ’12, PhD ’16, CSAIL research scientist and assistant professor at MGH and Harvard Medical School. “ScribblePrompt was carefully designed to be practically useful to clinicians and researchers, and hence to substantially make this step much, much faster.”

“The majority of segmentation algorithms that have been developed in image analysis and machine learning are at least to some extent based on our ability to manually annotate images,” says Harvard Medical School professor in radiology and MGH neuroscientist Bruce Fischl, who was not involved in the paper. “The problem is dramatically worse in medical imaging in which our ‘images’ are typically 3D volumes, as human beings have no evolutionary or phenomenological reason to have any competency in annotating 3D images. ScribblePrompt enables manual annotation to be carried out much, much faster and more accurately, by training a network on precisely the types of interactions a human would typically have with an image while manually annotating. The result is an intuitive interface that allows annotators to naturally interact with imaging data with far greater productivity than was previously possible.”

Wong and Dalca wrote the paper with two other CSAIL affiliates: John Guttag, the Dugald C. Jackson Professor of EECS at MIT and CSAIL principal investigator; and MIT PhD student Marianne Rakic SM ’22. Their work was supported, in part, by Quanta Computer Inc., the Eric and Wendy Schmidt Center at the Broad Institute, the Wistron Corp., and the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health, with hardware support from the Massachusetts Life Sciences Center.

Wong and her colleagues’ work will be presented at the 2024 European Conference on Computer Vision and was presented as an oral talk at the DCAMI workshop at the Computer Vision and Pattern Recognition Conference earlier this year. They were awarded the Bench-to-Bedside Paper Award at the workshop for ScribblePrompt’s potential clinical impact.