The rise of generative AI (genAI) over the past two years has driven a whirlwind of innovation and a massive surge in demand from enterprises worldwide to make the most of this transformative technology. However, with this drive for rapid innovation comes increased risk, as the pressure to build quickly often leads to cutting corners around security. Additionally, adversaries are now using genAI to scale their malicious activities, making attacks more prevalent and potentially more damaging than ever before.

To address these challenges, securing enterprise applications that use genAI requires implementing fundamental security controls to protect the backbone infrastructure. This infrastructure powers the applications and has access to the vast amounts of enterprise data they rely on. By ensuring these security fundamentals are in place, enterprises can trust these applications as they're deployed across the organization.

The next genAI evolution: The emergence of AI agents

GenAI is rapidly evolving from a content creation engine and a co-pilot for humans into autonomous agents capable of making decisions and performing actions on our behalf. Although AI agents are not yet widely used in major production environments, analysts predict their rapid adoption in the near future because of the enormous benefits they bring to organizations. This shift introduces significant security challenges, particularly in managing machine identities (AI agents) that may not always behave predictably.

As AI agents become more prevalent, enterprises will face the complexity of securing these identities at scale, potentially involving thousands or even millions of agents running concurrently. Key security considerations include authenticating AI agents to various systems (and to other AI agents), managing and limiting their access, and controlling their lifecycle so that rogue agents cannot retain unnecessary access. Additionally, it's crucial to verify that AI agents are performing their intended functions within the services they're designed to support.
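The following sketch illustrates the authentication, access-limiting and lifecycle points. It shows one way to give each AI agent a short-lived, scope-limited credential that can be revoked when the agent is decommissioned. Several details are assumptions for illustration only. The `AgentCredentialIssuer` class and its methods are hypothetical, not a vendor API. The HMAC-SHA256 token format is an ad hoc choice, and a real deployment would more likely rely on an established identity provider and a standard token format.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import List, Optional


class AgentCredentialIssuer:
    """Hypothetical issuer of short-lived, scoped tokens for AI agents."""

    def __init__(self, signing_key: Optional[bytes] = None) -> None:
        # Signing secret; in practice this would live in a vault or KMS, not in memory.
        self._key = signing_key or secrets.token_bytes(32)
        self._revoked_agents = set()

    def _sign(self, body: str) -> str:
        return hmac.new(self._key, body.encode(), hashlib.sha256).hexdigest()

    def issue(self, agent_id: str, scopes: List[str], ttl_seconds: int = 300,
              now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        # Each token names the agent, lists only the scopes it needs, and expires.
        payload = {"sub": agent_id, "scopes": sorted(scopes), "exp": now + ttl_seconds}
        body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode()).decode()
        return f"{body}.{self._sign(body)}"

    def revoke_agent(self, agent_id: str) -> None:
        # Called at decommissioning so a retired agent cannot keep using old tokens.
        self._revoked_agents.add(agent_id)

    def verify(self, token: str, required_scope: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        try:
            body, sig = token.rsplit(".", 1)
        except ValueError:
            return False
        # Constant-time comparison rejects forged or tampered tokens.
        if not hmac.compare_digest(sig, self._sign(body)):
            return False
        payload = json.loads(base64.urlsafe_b64decode(body.encode()))
        if payload["sub"] in self._revoked_agents or now >= payload["exp"]:
            return False
        return required_scope in payload["scopes"]
```

In this sketch, a token issued with `ttl_seconds=60` and scope `read:tickets` would pass `verify` for that scope during its first minute. It would fail for any other scope, after expiry, or once `revoke_agent` has been called. Keeping lifetimes short limits how long a leaked or orphaned agent credential stays useful.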

As this technology continues to evolve, more insights will emerge on best practices for integrating AI agents into enterprise systems securely. What is already clear, however, is that securing the backend infrastructure powering your genAI implementations will be a prerequisite for running AI agents on an inherently secure platform.

Addressing emerging security challenges

The first thing that may come to mind when considering genAI security is the need to secure these new and exciting technologies as they emerge in the real world. These are significant challenges because of the rapid pace of change, the introduction of new types of services and capabilities, and ongoing innovation.

As with any period of significant innovation, security controls and practices must be adapted. In some cases, innovation may be required to address challenges that didn't exist before. The rise of genAI is no exception, bringing unique security concerns that demand continuous innovation. One example is protecting genAI-powered applications from attacks such as prompt injection, which can cause the application to expose sensitive data or perform unintended actions.

However, it's important to remember that, like any application, genAI-powered apps are built on underlying systems and databases. If this backbone infrastructure is not properly secured, enterprise applications using genAI will be vulnerable to potentially devastating attacks. Attackers can leak large swaths of sensitive data, poison data, manipulate the AI model, or disrupt the system's availability and the customer experience.

Many identities require access to the backend infrastructure, and each one represents a high level of risk and a likely target for attackers. Identity-related breaches remain the leading cause of cyberattacks, giving attackers unauthorized access to sensitive systems and data. Recognizing these identities, understanding their roles and access requirements, and securing them are critical priorities.

Fortunately, securing identities within this backbone infrastructure relies on the same identity security best practices you likely already use to protect other environments. This is particularly true of your cloud infrastructure, where most genAI components will be deployed.

Inside enterprise genAI-powered applications

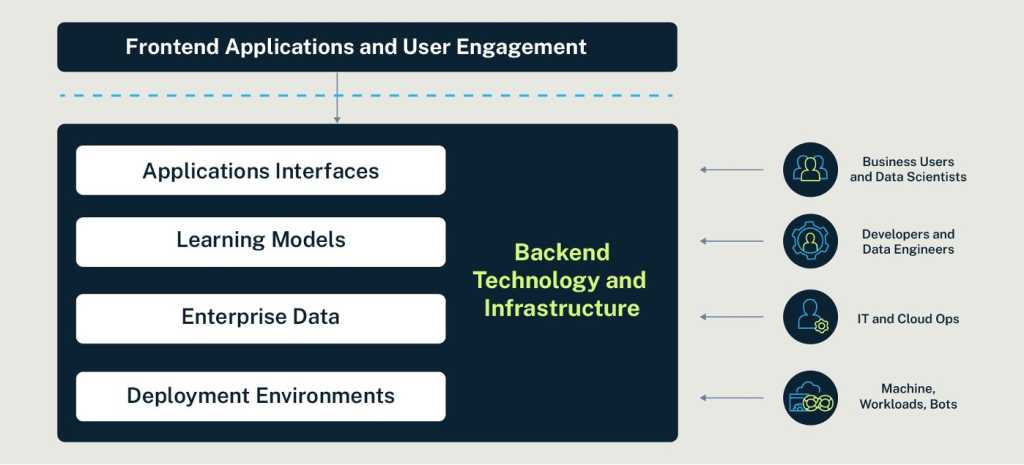

Before diving into the recommended security controls and approach, let's briefly review some of the key components and building blocks of genAI-powered applications, along with the identities that interact with them. This overview isn't meant to be comprehensive, but it covers some essential approaches and areas to consider. It focuses especially on components and services typically hosted or managed by your organization.

Here are some critical components to consider:

- Application interfaces: APIs act as gateways for applications and users to interact with genAI systems. Securing these interfaces is essential to prevent unauthorized access and ensure only legitimate requests are processed.

- Learning models and LLMs: These algorithms analyze data to identify patterns and make predictions or decisions based on that data. They're trained on vast amounts of data to power capable applications. Most enterprises will use one or more leading LLMs from major global players such as OpenAI, Google and Meta. Public data trains these LLMs. To develop high-performing, cutting-edge applications, however, you must also train them on the unique data that gives your business a competitive edge.

- Enterprise data: Data fuels AI, driving machine learning algorithms and insights. Leveraging internal enterprise data is key to creating unique and impactful genAI-powered enterprise applications. Protecting sensitive and confidential information from leaks or loss is a top concern and a prerequisite for rolling out these applications.

- Deployment environments: Whether on-premises or in the cloud, the environments where you deploy AI applications must be secured with stringent identity security measures.
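The "application interfaces" point can be illustrated with a sketch of a deny-by-default check that an API gateway might apply before a request reaches a genAI service. This is an illustration under stated assumptions, not a specific product's API. The `ApiKeyGate` class and the route names are hypothetical. The gate stores only SHA-256 digests of issued keys and maps each key to the routes it may call, so everything else is rejected.

```python
import hashlib
import secrets


def _digest(raw_key: str) -> str:
    # Only the SHA-256 digest is stored, so a leaked key table does not expose usable keys.
    return hashlib.sha256(raw_key.encode()).hexdigest()


class ApiKeyGate:
    """Hypothetical deny-by-default check in front of a genAI API endpoint."""

    def __init__(self) -> None:
        # Digest of each issued key -> the set of routes that key may call.
        self._keys = {}

    def register(self, allowed_routes) -> str:
        raw_key = secrets.token_urlsafe(32)
        self._keys[_digest(raw_key)] = frozenset(allowed_routes)
        return raw_key  # handed to the caller once; never stored in plain text

    def revoke(self, raw_key: str) -> None:
        self._keys.pop(_digest(raw_key), None)

    def is_allowed(self, raw_key: str, route: str) -> bool:
        routes = self._keys.get(_digest(raw_key))
        # Unknown keys and unlisted routes are both rejected (deny by default).
        return routes is not None and route in routes
```

The design here scopes each key to the smallest set of routes it needs. A key registered for `/v1/chat` in this sketch cannot reach an administrative route, and revoking it removes access immediately.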

CyberArk

Ultimately, the technologies, services and databases in your cloud environment or data center form the backend infrastructure behind genAI-powered applications, and you must protect them like any other systems.

Implementing robust identity security measures for each of these elements is essential to mitigate risks and ensure the integrity of genAI applications.

Implementing robust identity security controls

Various identities have high levels of privilege over the critical infrastructure powering enterprise genAI applications. If one of these identities is compromised and authentication is bypassed, a potential attacker gains a massive attack surface and multiple avenues of access. These privileged users extend beyond the IT and cloud operations teams that build and manage the infrastructure and its access. They include (but are not limited to):

- Business users assigned to analyze trends in the data, contribute their expertise and provide validation.

- Data scientists who develop models, prepare datasets and analyze the data.

- Developers and DevOps engineers who manage the databases and, along with IT teams, are responsible for building and scaling the backend infrastructure, either directly or through automated scripts.

A hijacked developer identity could grant an attacker privileged read and write access to sensitive code repositories, core cloud infrastructure management capabilities, and confidential enterprise data.

We must also remember that this genAI backbone is sprawling with machine identities. These identities allow systems, applications and scripts to access resources, process data, build, manage and scale infrastructure, enforce access and security controls, and more. As with most modern IT and cloud environments, you can assume there will be more machine identities than human ones.

With such high stakes, following a zero trust, assume-breach approach is key. Security controls must go beyond authentication and basic role-based access control (RBAC), so that a compromised account doesn't leave a large and vulnerable attack surface.

Consider the following identity security controls for all the types of identities outlined above:

- Enforcing strong adaptive MFA for all user access.

- Securing access to, auditing the use of and regularly rotating the credentials, keys, certificates and secrets used by humans and by backend applications or scripts. Ensure that API keys or tokens that cannot be rotated automatically are not permanently assigned, and that only the minimum necessary systems and services are exposed.

- Implementing zero standing privileges (ZSP) to ensure that users have no permanent access rights and can access data and assume specific roles only when necessary. Where ZSP is not an option, aim to implement least privilege access to minimize the attack surface if a user is compromised.

- Isolating and auditing sessions for all users who access the genAI backbone components.

- Centrally monitoring all user behavior for forensics, audit and compliance, and logging and monitoring any changes.
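The zero standing privileges and auditing controls can be sketched as a just-in-time access broker. In this minimal illustration, users hold no roles by default. Every access request is logged with its reason, and granted access lapses on its own when it expires. Several details are assumptions for the example only: the `JitAccessBroker` class, the `vector-db-admin` role name and the 15-minute cap (`max_ttl`). A real deployment would rely on a privileged access management platform rather than in-memory lists.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Grant:
    user: str
    role: str
    expires_at: float


@dataclass
class JitAccessBroker:
    """Hypothetical broker: no standing roles, only time-boxed, logged grants."""
    eligible: dict                 # user -> set of roles the user may request
    max_ttl: float = 900.0         # assumed 15-minute ceiling on any grant
    grants: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def request(self, user: str, role: str, reason: str, ttl: float,
                now: Optional[float] = None) -> Optional[Grant]:
        now = time.time() if now is None else now
        if role not in self.eligible.get(user, set()):
            # Denied requests are recorded too, which helps forensics.
            self.audit_log.append((now, user, role, "DENIED", reason))
            return None
        grant = Grant(user, role, now + min(ttl, self.max_ttl))
        self.grants.append(grant)
        self.audit_log.append((now, user, role, "GRANTED", reason))
        return grant

    def has_role(self, user: str, role: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        # Expired grants are pruned, so access disappears without manual cleanup.
        self.grants = [g for g in self.grants if g.expires_at > now]
        return any(g.user == user and g.role == role for g in self.grants)
```

In this sketch, a user eligible for `vector-db-admin` has no access until a request is made. A request for an hour is capped at 15 minutes, and access is gone once the grant expires. Eligibility is kept separate from active access, which reflects the least privilege fallback described above.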

Balancing security and usability in genAI projects

When planning your approach to implementing security and privilege controls, it's crucial to recognize that genAI-related projects will likely be highly visible within the organization. In these scenarios, development teams and corporate initiatives may view security controls as obstacles. The complexity increases because you need to secure a diverse group of identities, each requiring different levels of access and using various tools and interfaces. That's why the controls you apply must scale and must respect users' experience and expectations, without negatively impacting productivity and performance.

Download “The Identity Security Imperative” for insights on how to implement identity security using practical, proven strategies to stay ahead of advanced and emerging threats.